About to attain “chính quả” at last! ❤️ Take “chính quả” literally here, that is… the main result! 😃 After finishing the build of Serenity, I fumbled for many months before succeeding with this “brace” technique, a way of bringing the boat back upright after it has been knocked over on its side. Watching “the Westerners” do it, it looks so easy and effortless!

Now that I can do it, it seems fairly ordinary, not that hard, but honestly the whole process was “extremely” hard, “extraordinarily” hard, because so many factors have to come together: strength, lever-arm length, coordination of arms, shoulders, back, hips and legs, and the boat's mass distribution and buoyancy… The footage is a bit blurry; I will post a few better clips! 🙂

Kayaking has more than 40 “named” techniques! That's “the Westerners” for you, analyzing every last detail! Broadly, there are the 5 groups below, each containing many sub-techniques. I will post videos illustrating each one in turn! Of these, the brace is the most important and must be practiced until it becomes second nature! Nhân chu nhất thể (person and boat as one body) –

That day, cycling from Vũng Tàu back to Sài Gòn, I was passing through Phú Mỹ when I came across this “little flock”. Boats of this form and style are called “pram” in English; I don't know how the design made its way here, but it is not found in Vietnamese tradition…

Modern nutrition, with its low-carb this and high-carb that, is a complete mess, and I don’t give a damn about it! Still, there is a clear difference between “eating to look good” and “eating to perform”; the two are entirely different and should not be confused. Six-pack abs, bulging muscles: with too much muscle it becomes hard to keep moving steadily over the long haul, because in the end it all comes down to calories!

A few years ago, after I finished building the H3 and launched it for a test paddle, some guy came over to get acquainted, curious about everything! When I hauled the boat home, all the stainless-steel fittings had crumbled to powder, corroded by acid! A small detail, but it tells you who did the damage and what he does for a living, because only professional thieves and lock-breakers know tricks like that!

April 30 is Reunification Day (Vietnam), and at the same time the day of victory over fascism (Soviet Union). A medley of the 6 most familiar and famous songs of “The Red Army”; except for the last, each song represents a service branch or arm: 1. We’re the army of the people, 2. Three tankists, 3. March of Stalin artillery, 4. Avia march, 5. If you’ll be lucky, 6. Let’s go!

I just keep sitting and looking at this 18.5 billion; the girl is composing poetry, and the page shows 2 characters:

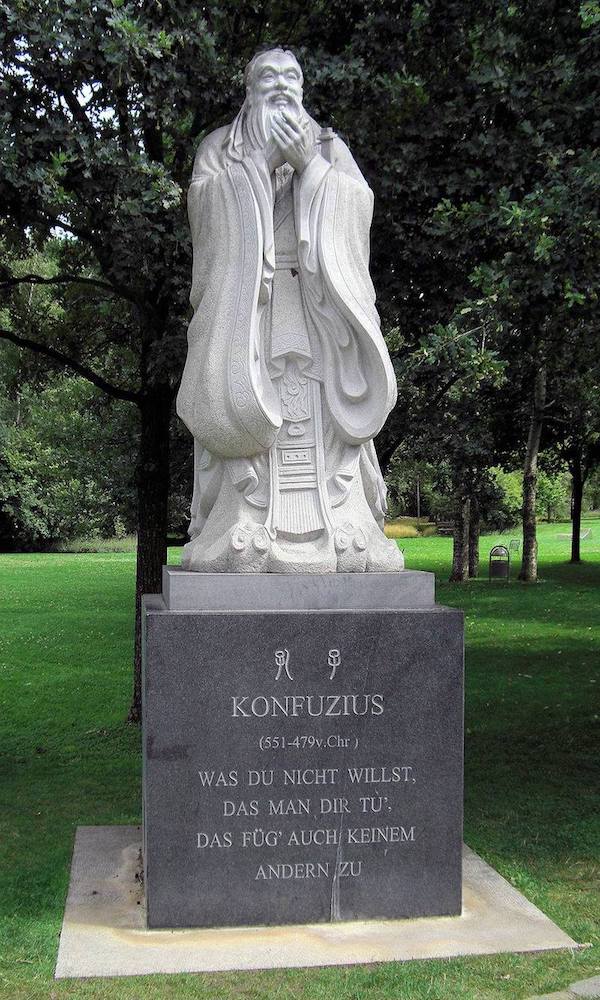

Back to Kim Dung's martial-arts novels: Thiên Long bát bộ, chapters 58 and 59. It turns out that Tô Tinh Hà of the Tiêu Dao sect had 8 disciples known as the Hàm Cốc bát hữu (the Eight Friends of Hàm Cốc). Their martial arts were not strong, but each had a “consummate skill” of his own: one played music, another played chess, another read books… Among the Hàm Cốc bát hữu, the third in rank was called Cẩu Độc, a name meaning: reads whatever he lays hands on. He has read countless books, and the moment he opens his mouth it is “Tử viết, Tử viết” (Confucius said this, Confucius said that…), forever citing classical allusions and picking phrases out of old texts. These two chapters of Thiên Long bát bộ are full of “Tử viết, Tử viết”, a truly delightful piece of satire and mockery!
