There’s the change that I’m really worried about: that the way a lot of programming goes today isn’t any fun because it’s just plugging in magic incantations – combine somebody else’s software and start it up. It doesn’t have much creativity. I’m worried that it’s becoming too boring because you don’t have a chance to do anything much new… The problem is that coding isn’t fun if all you can do is call things out of a library, if you can’t write the library yourself. If the job of coding is just to be finding the right combination of parameters, that does fairly obvious things, then who’d want to go into that as a career? (Coders at Work – Peter Siebel, quoting Donald Knuth)

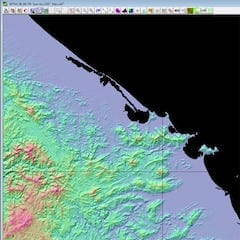

Ví dụ về chức năng đơn giản nhất: resize ảnh trên các browsers, ảnh trên: Firefox, ảnh dưới: Chrome, (ảnh được chụp lại và hiển thị nguyên kích cỡ từ 2 browser, cả 2 dùng cùng một ảnh gốc kích thước lớn). Có thể thấy chức năng resize ảnh của Firefox, IE kém rất xa so với các browser khác.

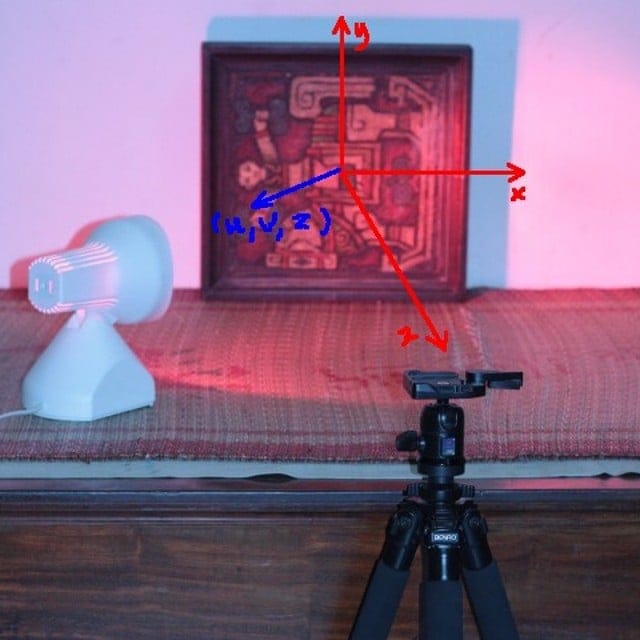

hatever happened to programming? Điều gì đã xảy ra với lập trình? Khi ở năm 1 đại học (1998), tôi có viết một 3D engine đơn giản bằng Borland C, lúc đó sách vở thiếu, internet thì chưa có. Mục tiêu đặt ra rất đơn giản: hiển thị (xoay) một đối tượng 3D đọc từ file mô tả tọa độ các đỉnh. Bằng những hình dung hình học đơn giản, tôi xây dựng một phép chiếu: giao điểm của đường thẳng từ mắt tới các đỉnh của vật thể với mặt phẳng chiếu chính là pixel được hiển thị trên màn hình. Sau đó ít lâu đọc thêm tài liệu, tôi mới biết đó gọi là: phép chiếu phối cảnh – perspective projection. Một số đoạn code released từ game Doom giúp tôi hiểu rõ hơn kỹ thuật chiếu dùng trong game thực: ray casting.

hatever happened to programming? Điều gì đã xảy ra với lập trình? Khi ở năm 1 đại học (1998), tôi có viết một 3D engine đơn giản bằng Borland C, lúc đó sách vở thiếu, internet thì chưa có. Mục tiêu đặt ra rất đơn giản: hiển thị (xoay) một đối tượng 3D đọc từ file mô tả tọa độ các đỉnh. Bằng những hình dung hình học đơn giản, tôi xây dựng một phép chiếu: giao điểm của đường thẳng từ mắt tới các đỉnh của vật thể với mặt phẳng chiếu chính là pixel được hiển thị trên màn hình. Sau đó ít lâu đọc thêm tài liệu, tôi mới biết đó gọi là: phép chiếu phối cảnh – perspective projection. Một số đoạn code released từ game Doom giúp tôi hiểu rõ hơn kỹ thuật chiếu dùng trong game thực: ray casting.

Sau đó 1 thời gian bắt đầu có internet, chúng tôi lại “say mê” tìm hiểu các kỹ thuật graphics “tối tân” hơn. Còn nhớ lúc đó có trang web winasm.net, nơi chỉ vẻ rất nhiều về Gouroud, Phong shading, light, bump mapping, sprites… Chúng tôi đọc các code viết chủ yếu bằng Assembly, rồi viết lại bằng C/C++ trong cái “engine” của mình. Chuyện là như thế, chúng tôi “nghĩ gì viết nấy”, đa số các trường hợp là “phát minh lại cái bánh xe”, bắt đầu bằng việc tự xây dựng những mô hình, thuật toán “ngây thơ” trong đầu. Cái “engine” của tôi so với các engine chuyên nghiệp bây giờ thì thật đáng xấu hổ, nhưng ít nhất chúng tôi hiểu những ý tưởng, nguyên tắc vận hành cơ bản.

Những điều kể ra trên đây chỉ mới là a, b, c… trong computer graphics, lĩnh vực có rất rất nhiều thuật toán, kỹ xảo, mánh khóe… hấp dẫn và độc đáo. Còn nhớ vì ham thích viết các chương trình đồ họa và xử lý ảnh nên một số môn khác tôi không chú ý tới. Như các môn AI, có lần tôi copy code của một người bạn về nộp làm bài tập (đã được cho phép). Đó chỉ là một chương trình chơi caro (croix-zero) dùng thuật toán A*, nhưng vì cúp cua nên tôi không biết về A*, ngồi cả đêm đọc xem cái chương trình đơn giản đó làm cái gì nhưng vẫn không tài nào hiểu được. Sau đó “bổ túc kiến thức” thì biết là A* cũng chẳng phải là điều gì phức tạp, nhưng nếu không có ý tưởng về thuật toán trong đầu… thì code cũng như một đám rừng chẳng cho anh biết được gì về nó.

Sau đó tôi viết nhiều về xử lý ảnh, tới đây thì nền tảng toán trở nên quan trọng, đa số các khái niệm là đủ phức tạp để không tự hình dung bằng trực quan trực giác được. Nhưng nghiên cứu kỹ lưỡng chút thì rồi chúng tôi cũng hiểu được ý tưởng đằng sau các khái niệm convolution, high – pass, low – pass filters… Nhờ những kiến thức đó mà bây giờ tôi có thể có được những nhận định đúng hơn, ví dụ như một chức năng tưởng chừng hết sức đơn giản như resize ảnh, nhưng cho đến tận bây giờ vẫn chưa được implement đúng trên hầu hết các trình xử lý ảnh phổ biến: GIMP, Photoshop… Có thể các bạn không tin điều này, nhưng GIMP, Photoshop, Firefox… resize ảnh bằng giải thuật tuyến tính nên có thể bỏ đi những thông tin quan trọng, và giữ lại những pixel không quan trọng.

Một số kỹ sư phần mềm nói với tôi: suốt nhiều năm đi làm chưa bao giờ anh ta phải cài đặt một thuật toán nào đã học ở trường, việc lập trình hiện tại chỉ là lắp ghép các module, library ở high – level, rất ít khi đụng đến bản chất thật low – level bên dưới. Tôi cũng đồng ý như thế, một số dự án đơn giản là làm việc ở high – level, không phải sản phẩm nào cũng đòi hỏi kỹ năng sáng tạo, đòi hỏi kỹ sư có hiểu biết sâu về các thuật toán chuyên biệt. Nhưng nói như vậy không có nghĩa là những kỹ năng đó không cần thiết và không quan trọng. Nếu không có những kỹ năng đó thì mãi mãi chúng ta chỉ làm được những công việc “làng nhàng”, thậm chí những công việc “làng nhàng” cũng đòi hỏi những khả năng know – how, tổ chức code nhất định. Trong nghề lập trình tôi vẫn ưa thích kiểu người hay “đi phát minh lại cái bánh xe”.

Thế nên mới biết là những điều nói ra càng đơn giản thì lại càng không đơn giản! Nhiều engineer trẻ tôi gặp sau này thường không có sự quan tâm tới những yếu tố đơn giản và cơ bản như thế. Dĩ nhiên những điều trên đây chẳng có gì to tát, cũng như đa số các vấn đề lập trình cũng không phải là điều gì lớn lao. Nhưng trước hết hãy cho tôi thấy bạn có hiểu biết cơ bản về vấn đề mình đang làm, đừng gọi hai vòng lặp for lồng nhau là: “thuật toán”, hãy có khả năng đọc hiểu mô tả của thuật toán và biến nó thành code chạy tốt… trước khi phán đó là những điều cơ bản không cần để ý tới. Tất cả bắt đầu với suy nghĩ của chính mình, với cái mô hình và trình tự trong đầu mình, chứ không phải bắt đầu với danh sách các khái niệm, chữ nghĩa, API… mà anh thậm chí không hiểu nội dung đằng sau nó! Sau đó thì mới có thể nói đến ý tưởng, điểm mạnh điểm yếu, chỗ dùng được (không dùng được) và sự khác biệt của các công nghệ!

Một cách rất quan trọng để hiểu công nghệ không phải là từ thuật toán, ý tưởng, mà là từ… lịch sử. Nếu quan tâm đọc lịch sử computer graphics, bạn sẽ biết đằng sau “Phong shading” là Bùi Tường Phong, một người Việt. Hay bạn sẽ hiểu tại sao SGI (Silicon Graphics Inc.) có nhiều ảnh hưởng đến thế, tại sao những phong cách, sản phẩm của công ty đó lại thiết đặt nên những tiêu chuẩn vàng trong cộng đồng computer graphics (ví dụ như OpenGL), tại sao máy Mac dùng UEFI chứ không dùng BIOS, tại sao NextStep và OpenStep dùng “vector display” chứ không phải là “bitmap display” như đa số các windowing system khác… Sự phát triển của công nghệ là một quá trình tiến hóa liên quan tới những yếu tố xã hội và con người, chứ không đơn giản là sự phức tạp hóa các ý tưởng kỹ thuật!

game to be published this October, to be precise, the first Vietnamese FPS (first person shooter) 3D game, and you know what it’s about, the famous Battle of Điện Biên Phủ. The game features 4 main characters, all joined the anti – colonial – French movements from the very early days, participated in various operations before arriving at the final confrontation at Điện Biên Phủ. Not yet to be released, but below are some nice preview screenshots. Having some experiences with game engines, I could say that PC 3D games nowadays are not that hard as before.

game to be published this October, to be precise, the first Vietnamese FPS (first person shooter) 3D game, and you know what it’s about, the famous Battle of Điện Biên Phủ. The game features 4 main characters, all joined the anti – colonial – French movements from the very early days, participated in various operations before arriving at the final confrontation at Điện Biên Phủ. Not yet to be released, but below are some nice preview screenshots. Having some experiences with game engines, I could say that PC 3D games nowadays are not that hard as before.

ector graphics

ector graphics

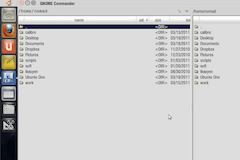

am trying Ubuntu’s new desktop introduced lately with Natty Narwhal (11.04). After heavy development phases,

am trying Ubuntu’s new desktop introduced lately with Natty Narwhal (11.04). After heavy development phases,

inished with my survey on vectorial graphics, in details, about rendering SVG using Quartz, OpenGL (ES) on Mac, iOS and some Android flatforms. I’d had the chances to systematize more my knowledge on vectorial: path, stroke, anti – aliasing, solid, gradient and pattern fill, etc… Todays, people’s all talking about 3D, OpenGL, DirectX, etc… While few mentions much about 2D stuffs, I’ve traced back some historical evolution paths, since I believe that it’s through history would we understand technologies.

inished with my survey on vectorial graphics, in details, about rendering SVG using Quartz, OpenGL (ES) on Mac, iOS and some Android flatforms. I’d had the chances to systematize more my knowledge on vectorial: path, stroke, anti – aliasing, solid, gradient and pattern fill, etc… Todays, people’s all talking about 3D, OpenGL, DirectX, etc… While few mentions much about 2D stuffs, I’ve traced back some historical evolution paths, since I believe that it’s through history would we understand technologies.

hatever happened to programming? Điều gì đã xảy ra với lập trình? Khi ở năm 1 đại học (1998), tôi có viết một 3D engine đơn giản bằng Borland C, lúc đó sách vở thiếu, internet thì chưa có. Mục tiêu đặt ra rất đơn giản: hiển thị (xoay) một đối tượng 3D đọc từ file mô tả tọa độ các đỉnh. Bằng những hình dung hình học đơn giản, tôi xây dựng một phép chiếu: giao điểm của đường thẳng từ mắt tới các đỉnh của vật thể với mặt phẳng chiếu chính là pixel được hiển thị trên màn hình. Sau đó ít lâu đọc thêm tài liệu, tôi mới biết đó gọi là: phép chiếu phối cảnh – perspective projection. Một số đoạn code released từ game Doom giúp tôi hiểu rõ hơn kỹ thuật chiếu dùng trong game thực:

hatever happened to programming? Điều gì đã xảy ra với lập trình? Khi ở năm 1 đại học (1998), tôi có viết một 3D engine đơn giản bằng Borland C, lúc đó sách vở thiếu, internet thì chưa có. Mục tiêu đặt ra rất đơn giản: hiển thị (xoay) một đối tượng 3D đọc từ file mô tả tọa độ các đỉnh. Bằng những hình dung hình học đơn giản, tôi xây dựng một phép chiếu: giao điểm của đường thẳng từ mắt tới các đỉnh của vật thể với mặt phẳng chiếu chính là pixel được hiển thị trên màn hình. Sau đó ít lâu đọc thêm tài liệu, tôi mới biết đó gọi là: phép chiếu phối cảnh – perspective projection. Một số đoạn code released từ game Doom giúp tôi hiểu rõ hơn kỹ thuật chiếu dùng trong game thực:

ecently, the wonderful

ecently, the wonderful